Proceedings

The proceedings of the workshop were published by the IEEE Computer Society (Conference Publishing Services, CPS) in the following volume:

2nd IEEE International Workshop on High-Performance Interconnection Networks in the Exascale and Big-Data Era HiPINEB@HPCA 2016, Barcelona, Spain, March 12, 2016. IEEE Computer Society 2016, ISBN 978-1-5090-2121-5

This edition is indexed in IEEE Xplore, the IEEE Computer Society Digital Library (CSDL), and DBLP. More information is available at the following links:

- http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=7457591

- https://www.computer.org/csdl/proceedings/hipineb/2016/2121/00/index.html

- http://dblp.uni-trier.de/db/conf/hpca/hipineb2016.htm

Program Highlights

- Keynote: given by Professor Jose Duato (Technical University of Valencia, Spain)

- Panel: “Large scale routing vs. congestion control: will it be central (SDN) or locally adaptive?”, combining the views of both academia and industry

- Technical sessions: 8 high-quality presentations of the latest research work in interconnection networks. (Authors of full papers have 20 minutes for their presentations, plus 2 minutes for questions from the audience. Authors of short papers have 10 minutes for their presentations, plus 5 minutes for questions.)

Agenda

8:45 – 9:00 – Presentation

- HiPINEB 2016 Opening

Jesus Escudero-Sahuquillo, University of Castilla-La Mancha, Spain

Pedro Javier Garcia, University of Castilla-La Mancha, Spain

9:00 – 10:30 – Keynote

Chairman: Francisco J. Quiles, University of Castilla-La Mancha, Spain

- Some architectural solutions for Exascale interconnects

Jose Duato, Technical University of Valencia

10:30 – 11:00 – Coffee Break

11:00 – 12:30 – Technical Session 1

Chairman: Francisco J. Alfaro, University of Castilla-La Mancha, Spain

A) Interconnects Architecture, Topology and Routing

- A New Fault-Tolerant Routing Methodology for KNS Topologies

Roberto Peñaranda, Ernst Gunnar Gran, Tor Skeie, Maria Engracia Gomez and Pedro Lopez

- Exploring Low-latency Interconnect for Scaling Out Software Routers

Sangwook Ma, Joongi Kim and Sue Moon

- Transitively Deadlock-Free Routing Algorithms

Jean-Noël Quintin and Pierre Vignéras

B) Energy Efficiency

- Analyzing the Energy (Dis-)Proportionality of Scalable Interconnection Networks

Felix Zahn, Pedro Yebenes, Steffen Lammel, Pedro J. Garcia and Holger Fröning

12:30 – 14:00 – Lunch

14:00 – 15:30 – Technical Session 2

Chairman: Eitan Zahavi, Mellanox Technologies, Israel

C) Virtualization, Quality-of-Service and Congestion Control

- Providing Differentiated Services, Congestion Management, and Deadlock Freedom in Dragonfly Networks

Pedro Yebenes Segura, Jesus Escudero-Sahuquillo, Pedro Javier Garcia, Francisco J. Alfaro and Francisco J. Quiles

- Remote GPU Virtualization: Is It Useful?

Federico Silla, Javier Prades, Sergio Iserte and Carlos Reaño

D) Performance Evaluation and Simulation Tools

- Application performance impact on trimming of a full fat tree InfiniBand fabric

Siddhartha Ghosh, Davide Delvento, Rory Kelly, Irfan Elahi, Nathan Rini, Storm Knight, Benjamin Matthews, Thomas Engel, Benjamin Jamroz and Shawn Strande

- Combining OpenFabrics Software and Simulation Tools for Modeling InfiniBand-based Interconnection Networks

German Maglione Mathey, Pedro Yebenes Segura, Jesus Escudero-Sahuquillo, Pedro Javier Garcia and Francisco J. Quiles

15:30 – 16:00 – Coffee Break

16:00 – 17:30 – Panel

Chairman: Pedro Javier Garcia, University of Castilla-La Mancha, Spain

Large scale routing vs. congestion control: will it be central (SDN) or locally adaptive?

Panelists:

- Torsten Hoefler, ETH Zurich, Switzerland

- Mitch Gusat, IBM, Switzerland

- Maria Engracia Gomez, Technical University of Valencia, Spain

Keynote

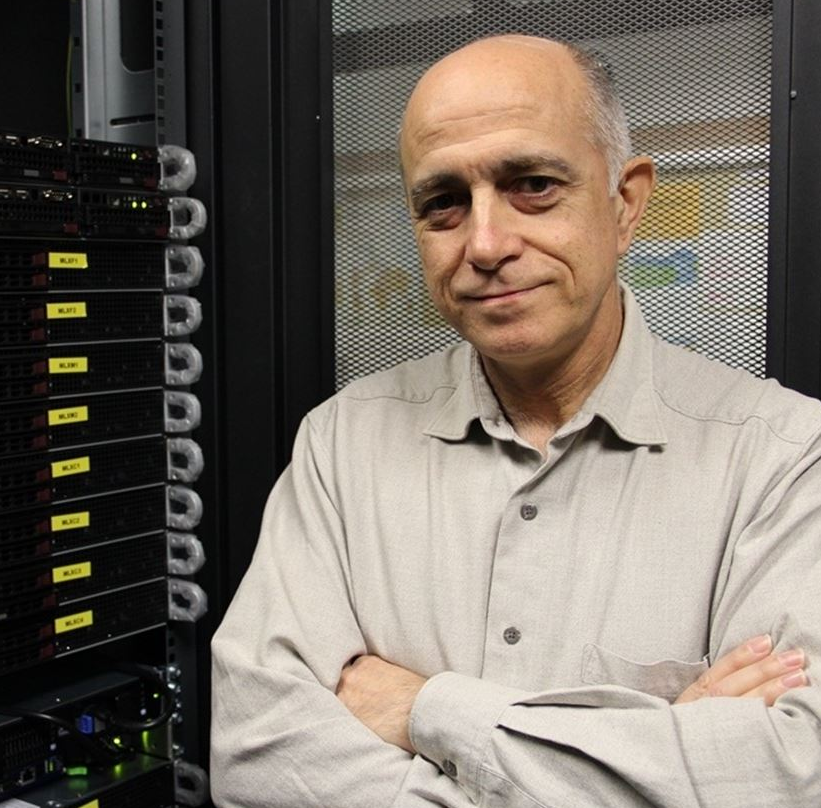

Some architectural solutions for Exascale interconnects

Jose Duato Marin (Technical University of Valencia, Spain)

Jose Duato is Professor in the Department of Computer Engineering (DISCA) at the Technical University of Valencia. His current research interests include interconnection networks, on-chip networks, and multicore and multiprocessor architectures. He has published over 500 refereed papers, which, according to Google Scholar, have received more than 11,000 citations. He proposed a theory of deadlock-free adaptive routing that has been used in the design of the routing algorithms for the Cray T3E supercomputer, the on-chip router of the Alpha 21364 microprocessor, and the IBM BlueGene/L supercomputer. He also developed RECN, a scalable congestion management technique, and a very efficient routing algorithm for fat trees that has been incorporated into Sun Microsystems’ 3456-port InfiniBand Magnum switch. Prof. Duato led the Advanced Technology Group in the HyperTransport Consortium and was the main contributor to the High Node Count HyperTransport Specification 1.0. He also led the development of rCUDA, which enables remote virtualized access to GPGPU accelerators through a CUDA interface. Prof. Duato is the first author of the book “Interconnection Networks: An Engineering Approach”. He has also served on the editorial boards of IEEE Transactions on Parallel and Distributed Systems, IEEE Transactions on Computers, and IEEE Computer Architecture Letters. Prof. Duato was awarded the National Research Prize in 2009 and the “Rey Jaime I” Prize in 2006.

Panel

Large scale routing vs. congestion control: will it be central (SDN) or locally adaptive?

Chairman: Pedro Javier Garcia, University of Castilla-La Mancha, Spain

Panelists:

- Torsten Hoefler, ETH Zurich, Switzerland

- Mitch Gusat, IBM Zurich, Switzerland

- Maria Engracia Gomez, Technical University of Valencia, Spain

Torsten Hoefler is an Assistant Professor of Computer Science at ETH Zürich, Switzerland. Before joining ETH, he led the performance modeling and simulation efforts for parallel petascale applications in the NSF-funded Blue Waters project at NCSA/UIUC. He is also a key member of the Message Passing Interface (MPI) Forum, where he chairs the “Collective Operations and Topologies” working group. Torsten has won best paper awards at the ACM/IEEE Supercomputing Conference (SC10, SC13, SC14), EuroMPI 2013, IPDPS 2015, and other conferences. He has published numerous peer-reviewed scientific conference and journal articles and authored chapters of the MPI-2.2 and MPI-3.0 standards. He received the Latsis award of ETH Zurich as well as an ERC starting grant in 2015. His research interests revolve around the central topic of “Performance-centric System Design” and include scalable networks, parallel programming techniques, and performance modeling. Additional information about Torsten can be found on his homepage at htor.inf.ethz.ch.

Mitch Gusat is a researcher and Master Inventor at the IBM Zurich Research Laboratory. His current focus is on datacenter and cloud fabrics, virtual networking and their feedback control and performance, modeling of distributed systems, SDN, scheduling, switching, and lossless datacenter networks beyond 400 Gbps, including their flow and congestion control, adaptive routing, workload optimization and monitoring. In this area he has led or contributed to the design and standardization of Converged Enhanced Ethernet/802 DCB, InfiniBand and RapidIO, while also advising Master's and PhD students from several European universities. His other research interests include control, optimization, SDN, HPC interconnection networks, shared (virtual) memory, real-time scheduling, high-performance protocols and I/O acceleration. Previously he was a Research Associate at the University of Toronto, where he contributed to the design and construction of NUMAchine, a 64-way cache-coherent computer. In a former lifetime, Mitch was a student and then a researcher at the “Politehnica” University of Timisoara, where he designed multiprocessor systems, parallel video interfaces, algorithms and image processors for nuclear cardiology. He holds Master's degrees in CE and EE, respectively, from the above universities. He is a member of ACM and IEEE, and holds a few dozen patents related to SDN, transports, HPC architectures, switching and scheduling.

María Engracia Gómez received the MS and PhD degrees in computer science from the Universitat Politècnica de València, Spain, in 1996 and 2000, respectively. She joined the Department of Computer Engineering (DISCA) at the Universitat Politècnica de València in 1996, where she is currently an associate professor of computer architecture and technology. Her research interests are in the fields of interconnection networks, networks-on-chip, memory hierarchy and cache coherence protocols. She has published more than 60 refereed conference and journal papers and has served on the program committees of several major conferences.

Technical Session Paper Abstracts

A) Interconnects Architecture, Topology and Routing

A New Fault-Tolerant Routing Methodology for KNS Topologies

Roberto Peñaranda, Ernst Gunnar Gran, Tor Skeie, Maria Engracia Gomez and Pedro Lopez

Exascale computing systems are being built with thousands of nodes. A key component of these systems is the interconnection network. The high number of components significantly increases the probability of failure. If failures occur in the interconnection network, they may isolate a large fraction of the machine. For this reason, an efficient fault-tolerant mechanism is needed to keep the system interconnected, even in the presence of faults. A recently proposed topology for these large systems is the hybrid KNS family, which provides supreme performance and connectivity at a reduced hardware cost. This paper presents a fault-tolerant routing methodology for the KNS topology that degrades performance gracefully in the presence of faults and tolerates a reasonably large number of faults without disabling any healthy node. In order to tolerate network failures, the methodology uses a simple mechanism: for some source-destination pairs, and only if necessary, packets are forwarded to the destination node through a set of intermediate nodes (without being ejected from the network) chosen so that the faults are avoided. The evaluation results show that the methodology tolerates a large number of faults. Furthermore, the methodology offers graceful performance degradation. For instance, performance degrades by only 1% for a 2D network with 1024 nodes and 1% faulty links.


Exploring Low-latency Interconnect for Scaling Out Software Routers

Sangwook Ma, Joongi Kim and Sue Moon

We propose and evaluate RoCE (RDMA over Converged Ethernet) as a low-latency backplane for horizontally scaled software router nodes. By exploring combinations of design choices in developing the internal fabric for software routers, we select a set of parameters and packet I/O APIs that yield the lowest latency and highest throughput. Using the optimal settings derived, we measure and compare the latency and throughput of a RoCE interconnect against Ethernet using a high-performance userspace network driver (Intel DPDK). Our comparison shows that RoCE maintains low latency for all packet sizes, while it incurs throughput penalties for network workloads (e.g., small packet sizes). To mitigate the throughput penalties imposed by guaranteeing low latency, we suggest a hardware-assisted, batched forwarding scheme based on the scatter-gather functionality of RDMA-capable NICs. When forwarding ingress network packets, our scheme achieves throughput comparable to or higher than Ethernet at the cost of several microseconds of latency.


Transitively Deadlock-Free Routing Algorithms

Jean-Noël Quintin and Pierre Vignéras

In exascale platforms, faults are likely to occur more and more frequently due to the huge number of components. To handle them, the BXI fabric management uses a generic architecture that specifies two distinct modes of operation: offline mode computes, validates and uploads nominal routing tables, while online mode reacts at runtime to failures and recoveries by computing small patches and uploading them to the concerned switches. This design, presented in a previous article, helps limit both the computation time and the impact of failures on the whole fabric. However, in wormhole-switched networks such as BXI, uploading new routing tables at runtime is known to be generally deadlock-prone. This paper thus introduces a new property of online routing algorithms, called transitively deadlock-free, and presents the formal description of two BXI online routing algorithms holding this property. It also shows that combining a deadlock-free offline routing algorithm with a transitively deadlock-free online one yields a fault-tolerant, deadlock-free routing algorithm. The theory is flexible enough to be adapted to other routing algorithms and other topologies.


B) Energy Efficiency

Analyzing the Energy (Dis-)Proportionality of Scalable Interconnection Networks

Felix Zahn, Pedro Yebenes, Steffen Lammel, Pedro J. Garcia and Holger Fröning

Power consumption is one of the most important aspects of the design and operation of large computing systems, such as High-Performance Computing (HPC) and cloud installations. Various hard constraints exist for technical, economic and ecological reasons. We will show that interconnection networks contribute substantially to power consumption, even though their peak power rating is low compared to other components. Moreover, unlike other components such as processors, networks are still not energy-proportional. In fact, network links consume the same amount of energy whether they are in use or not. In this work, we analyze the potential for power savings in high-performance direct interconnection networks. First, by analyzing the power consumption of today’s network switches, we find that network links contribute most to a switch’s power, but they behave differently from other components like processors regarding possible power savings. We extend an OMNeT++-based interconnection network simulator with link power models to assess power savings. Our early experiments, based on traces of the NAMD and Graph500 applications, show an immense potential for power saving, as we observe long inactivity periods. However, in order to design effective power-saving strategies, it is necessary to reach a detailed understanding of different hardware parameters. The transition time, which is the time required to reconfigure a link, could be crucial for most strategies. We see our OMNeT++-based, energy-aware simulator as a first step towards a deeper knowledge of such constraints.


C) Virtualization, Quality-of-Service and Congestion Control

Providing Differentiated Services, Congestion Management, and Deadlock Freedom in Dragonfly Networks

Pedro Yebenes Segura, Jesus Escudero-Sahuquillo, Pedro Javier Garcia, Francisco J. Alfaro and Francisco J. Quiles

High-Performance Computing (HPC) systems and datacenters are growing in size to meet the performance required by applications. This makes the interconnection network a central element in these systems, which must provide high communication bandwidth and low latency. One of the most important aspects in the design of interconnection networks is their topology. In that sense, the Dragonfly topology is nowadays very popular, as it offers high scalability, low diameter, path diversity, high bisection bandwidth, etc. Routing in Dragonfly topologies is an important issue, since deadlocks must be prevented, usually by means of escape paths that break cycles. However, even with deadlock-free routing, the performance of Dragonfly topologies degrades when the negative effects of congestion appear (e.g., Head-of-Line (HoL) blocking). Hence, some techniques are used to reduce HoL blocking, usually based on separating traffic flows into different queues implemented by means of Virtual Channels (VCs) or similar structures. VCs are also used to implement differentiated-services provision in systems supporting applications with different levels of priority. The combination of HoL-blocking reduction and differentiated-services provision through an integrated management of VCs has been previously studied. However, this integrated management of VCs does not consider the additional use of VCs as escape paths, so it cannot be applied to Dragonfly topologies. For that reason, in this paper we adapt the integrated management of VCs to Dragonfly topologies so that, using a reduced number of VCs, our proposal is able to offer differentiated-services provision, HoL-blocking reduction and deadlock freedom. Performance results, obtained through simulation experiments in medium-to-large Dragonfly networks, show that the proposed technique outperforms other solutions.


Remote GPU Virtualization: Is It Useful?

Federico Silla, Javier Prades, Sergio Iserte and Carlos Reaño

Graphics Processing Units (GPUs) are currently used in many computing facilities. However, GPUs present several drawbacks, such as increased acquisition costs and larger space requirements. Also, GPUs still require some amount of energy while idle, and their utilization is usually low. In a similar way to virtual machines, using virtual GPUs may address these concerns. In this regard, remote GPU virtualization allows the GPUs present in the computing facility to be shared among the nodes of the cluster. This would increase overall GPU utilization, thus reducing the negative impact of the increased costs mentioned before. Reducing the number of GPUs installed in the cluster could also be possible. In this paper we explore some of the benefits that remote GPU virtualization brings to clusters. For instance, this mechanism allows an application to use all the GPUs present in a cluster. Another benefit of this technique is that cluster throughput, measured as jobs completed per time unit, is doubled when this technique is used. Furthermore, in addition to increasing overall GPU utilization, total energy consumption is reduced by up to 40%. This may be key in the context of exascale computing facilities, which face an important energy constraint.


D) Performance Evaluation and Simulation Tools

Application performance impact on trimming of a full fat tree InfiniBand fabric

Siddhartha Ghosh, Davide Delvento, Rory Kelly, Irfan Elahi, Nathan Rini, Storm Knight, Benjamin Matthews, Thomas Engel, Benjamin Jamroz and Shawn Strande

We measured InfiniBand traffic in our full fat tree fabric and measured the performance impact of trimming the fabric on our major application kernels. Based on traffic pattern analysis and application performance impact, we infer that a 2:1 trimmed fat tree is a cost-effective alternative to a full fat tree for this specific set of applications. The methodology we used may be useful for others who are performing design trade-offs for HPC systems. We also propose that switch hardware vendors design director-class switches with trimmed fat tree options that optimize per-port costs.


Combining OpenFabrics Software and Simulation Tools for Modeling InfiniBand-based Interconnection Networks

German Maglione Mathey, Pedro Yebenes Segura, Jesus Escudero-Sahuquillo, Pedro Javier Garcia and Francisco J. Quiles

The design of interconnection networks is becoming extremely important for High-Performance Computing (HPC) systems in the Exascale Era. Design decisions like the selection of the network topology, routing algorithm, fault tolerance and/or congestion control are crucial for network performance. Besides, interconnection network designers are also focused on creating middleware layers compatible with different network technologies, which make it possible for these technologies to interoperate. One example is the OpenFabrics Software (OFS), used in HPC for breakthrough applications that require high-efficiency computing, wire-speed messaging, microsecond latencies and fast I/O for storage and file systems. OFS is compatible with several HPC interconnect technologies, like InfiniBand, iWARP or RoCE. One challenge in the design of new features for improving interconnection network performance is to model, in specific simulation tools, the latency that the OFS modules introduce into the network traffic. In this paper, we present a work-in-progress methodology to combine the OFS middleware with OMNeT++-based simulation tools, so that some of the OFS modules, like OpenSM or ibsim, can be used together with the simulation tools. We also propose a set of tools for analyzing the properties of different network topologies. Future work will consist of modeling the functionality of other OFS modules in network simulators.
